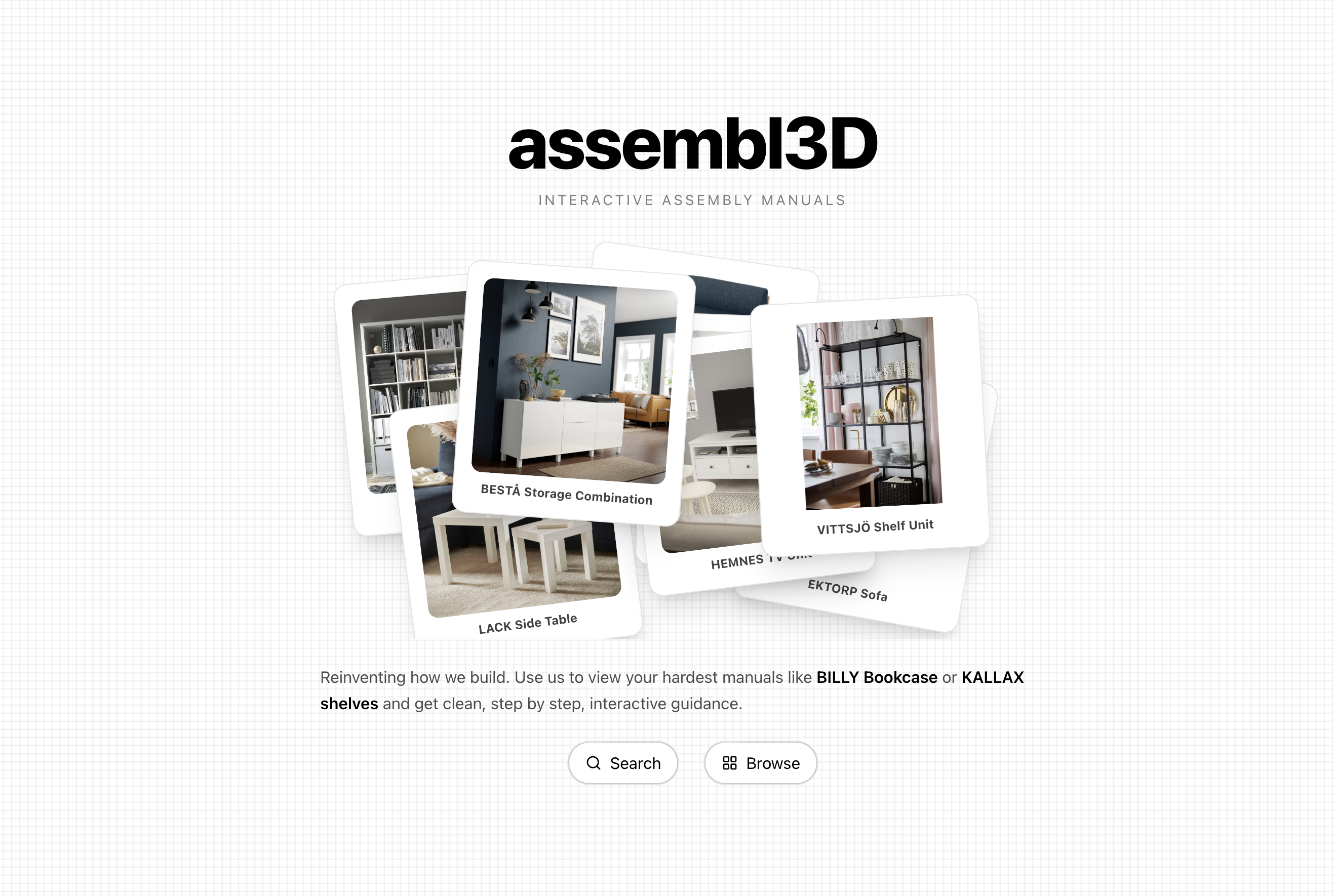

assembl3D

copilot for furniture assembly

Contents

- intelligent pdf scraping & processing

- ai vision analysis with gemini

- interactive 3d visualization

- real-time assembly chatbot

- 50+ product library

description

assembl3d is basically a copilot for putting together ikea furniture (or any furniture really). i built this at cal hacks 12.0 with my team because we were tired of staring at confusing 2d assembly manuals that look like hieroglyphics. the idea was simple: take any furniture manual pdf, use ai to understand what's going on, and render the steps in interactive 3d so you can actually see what you're supposed to be doing.

the first part was getting the manuals themselves. i used bright data's apis to automatically scrape product info from ikea. their serp api searches google for products, web unlocker downloads protected pdfs, and we cached everything to avoid re-downloading. we ended up with a library of 50+ popular ikea products that you can browse through, or you can just paste any product url and the system will grab it for you.
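here's roughly what the "paste any product url" path could look like. this is a minimal sketch, not our actual code. it assumes ikea product urls end in a slug followed by an 8-digit article number (sometimes prefixed with `s` for combinations), and it uses that number as the cache key. `ManualEntry`, `extractArticleNumber`, and `resolveManual` are hypothetical names, and `scrape` stands in for the bright data serp + web unlocker calls, which aren't shown here.

```typescript
// Hypothetical shape of a cached manual entry; field names are illustrative.
interface ManualEntry {
  articleNumber: string;
  productUrl: string;
  pdfUrls: string[];
}

// Assumption: product urls end in "<slug>-<article number>/", where the article
// number is 8 digits, optionally prefixed with "s".
const ARTICLE_RE = /-(s?\d{8})\/?$/i;

function extractArticleNumber(productUrl: string): string | null {
  let path: string;
  try {
    path = new URL(productUrl).pathname;
  } catch {
    return null; // not a valid url at all
  }
  const match = ARTICLE_RE.exec(path);
  return match ? match[1].toLowerCase() : null;
}

// Check the local library first; only call the (hypothetical) scraper on a miss,
// then remember the result so the same product is never downloaded twice.
async function resolveManual(
  productUrl: string,
  library: Map<string, ManualEntry>,
  scrape: (url: string) => Promise<ManualEntry>,
): Promise<ManualEntry> {
  const id = extractArticleNumber(productUrl);
  if (id === null) throw new Error(`unrecognized product url: ${productUrl}`);
  const cached = library.get(id);
  if (cached) return cached;
  const entry = await scrape(productUrl);
  library.set(id, entry);
  return entry;
}
```

keying on the article number instead of the raw url means regional variants of the same product page (say `/us/en/` vs `/gb/en/`) land on the same cache entry, which is a reasonable default if the manuals are the same across regions.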

the harder part was making sense of the pdfs. assembly manuals are almost entirely visual: tiny diagrams with arrows and numbers everywhere. we tried text extraction at first, but it was basically useless. the pivot was converting each pdf page into a high-res image and feeding it to google gemini. with some careful prompting, gemini could actually parse out the assembly steps, parts list, quantities, tools needed, and even spatial positioning. getting the ai to understand complex ikea diagrams and spit out structured json was probably the biggest technical win of the project.
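the json side is the part worth being defensive about, since the model doesn't always return exactly what you asked for. below is a sketch assuming a schema of our own choosing (the `Part` / `Step` / `ManualPage` field names are illustrative, not necessarily what we shipped). it strips a markdown fence if the model wrapped its answer in one, checks the types, and rejects steps that reference parts missing from the parts list. the actual gemini request (page image plus prompt) is left out.

```typescript
// Hypothetical output schema requested from the model; names are illustrative.
interface Part {
  id: string; // label printed in the manual, e.g. "A"
  name: string;
  quantity: number;
}
interface Step {
  stepNumber: number;
  description: string;
  partIds: string[];
  tools: string[];
}
interface ManualPage {
  parts: Part[];
  steps: Step[];
}

// Models often wrap json in a markdown fence; \x60 is a backtick.
function stripCodeFence(text: string): string {
  return text.trim().replace(/^\x60{3}(?:json)?\s*/i, "").replace(/\s*\x60{3}$/, "");
}

function asRecord(value: unknown, label: string): Record<string, unknown> {
  if (typeof value !== "object" || value === null || Array.isArray(value)) {
    throw new Error(`${label} is not an object`);
  }
  return value as Record<string, unknown>;
}

function asStringArray(value: unknown, label: string): string[] {
  if (!Array.isArray(value) || !value.every((v) => typeof v === "string")) {
    throw new Error(`${label} must be an array of strings`);
  }
  return value;
}

// Parse and validate one page of model output before it reaches the 3d viewer.
function parseManualPage(raw: string): ManualPage {
  const { parts: rawParts, steps: rawSteps } = asRecord(JSON.parse(stripCodeFence(raw)), "response");
  if (!Array.isArray(rawParts) || !Array.isArray(rawSteps)) {
    throw new Error("response needs 'parts' and 'steps' arrays");
  }
  const parts: Part[] = rawParts.map((p, i) => {
    const { id, name, quantity: q } = asRecord(p, `parts[${i}]`);
    const quantity = q === undefined ? 1 : Number(q); // default to one if omitted
    if (typeof id !== "string" || typeof name !== "string" || !Number.isInteger(quantity) || quantity < 1) {
      throw new Error(`parts[${i}] is malformed`);
    }
    return { id, name, quantity };
  });
  const known = new Set(parts.map((p) => p.id));
  const steps: Step[] = rawSteps.map((s, i) => {
    const { stepNumber, description, partIds, tools } = asRecord(s, `steps[${i}]`);
    if (typeof stepNumber !== "number" || typeof description !== "string") {
      throw new Error(`steps[${i}] is malformed`);
    }
    const ids = asStringArray(partIds, `steps[${i}].partIds`);
    const missing = ids.filter((id) => !known.has(id));
    if (missing.length > 0) {
      throw new Error(`steps[${i}] references unknown parts: ${missing.join(", ")}`);
    }
    return { stepNumber, description, partIds: ids, tools: asStringArray(tools ?? [], `steps[${i}].tools`) };
  });
  return { parts, steps };
}
```

failing loudly here is deliberate: a page that doesn't validate can be retried or flagged instead of rendering a half-broken step in the viewer.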

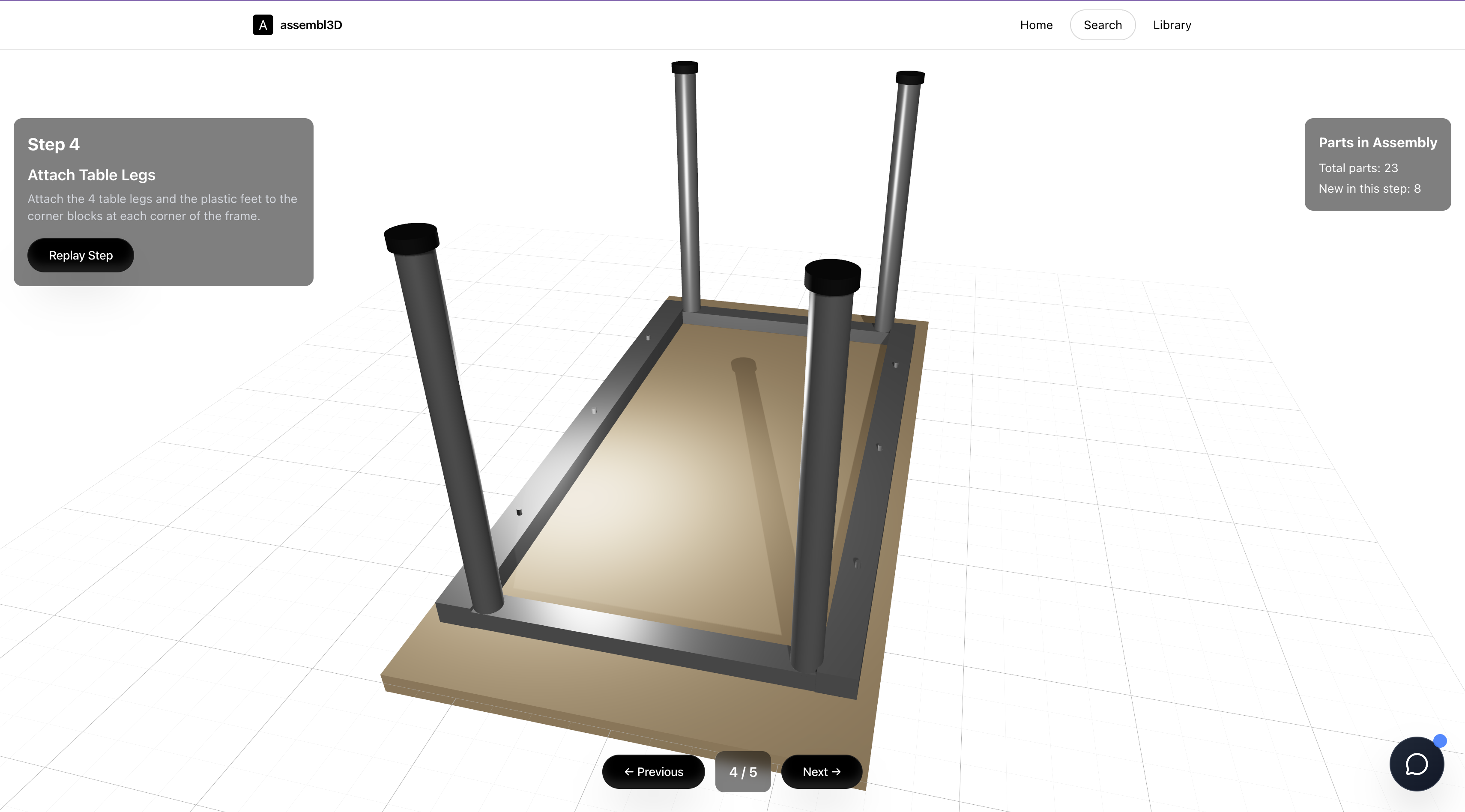

once we had the structured data, i built the 3d visualization using react three fiber. instead of using pre-made 3d models (which would've limited us to specific products), we generate geometric primitives procedurally based on the dimensions gemini extracts, so any part type can be rendered on the fly: screws, wooden slabs, brackets, whatever. the viewer lets you step through the assembly process, rotate the camera around with orbit controls, and see exactly how each part fits together.
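a rough sketch of the procedural idea, assuming parts come out of extraction with a kind and millimetre dimensions (`PartSpec`, `geometryFor`, and the mm-to-metre scale are all hypothetical). it returns plain descriptors whose `args` line up with the constructor arguments of three.js's `BoxGeometry(width, height, depth)` and `CylinderGeometry(radiusTop, radiusBottom, height, radialSegments)`, so the mapping logic can be tested without a renderer.

```typescript
// Hypothetical part description as extracted from the manual (units: mm).
type PartKind = "slab" | "bracket" | "screw" | "dowel";
interface PartSpec {
  kind: PartKind;
  length: number;
  width: number;
  thickness: number;
}

// args match three.js BoxGeometry(width, height, depth) and
// CylinderGeometry(radiusTop, radiusBottom, height, radialSegments).
type GeometryDesc =
  | { type: "box"; args: [number, number, number] }
  | { type: "cylinder"; args: [number, number, number, number] };

const MM_TO_SCENE = 0.001; // assumption: 1 scene unit = 1 metre

function geometryFor(part: PartSpec, radialSegments = 8): GeometryDesc {
  const l = part.length * MM_TO_SCENE;
  const w = part.width * MM_TO_SCENE;
  const t = part.thickness * MM_TO_SCENE;
  switch (part.kind) {
    case "slab":
    case "bracket":
      // flat pieces become boxes: width on x, thickness on y, length on z
      return { type: "box", args: [w, t, l] };
    case "screw":
    case "dowel": {
      // round pieces become low-poly cylinders; `width` is treated as the diameter
      const r = w / 2;
      return { type: "cylinder", args: [r, r, l, radialSegments] };
    }
    default: {
      // compile-time exhaustiveness check if a new PartKind is added
      const unreachable: never = part.kind;
      throw new Error(`unknown part kind: ${String(unreachable)}`);
    }
  }
}
```

in react three fiber, a descriptor like this can feed straight into `<boxGeometry args={desc.args} />` or `<cylinderGeometry args={desc.args} />` inside a `<mesh>`. keeping `radialSegments` small is one easy way to keep screw and dowel meshes low-poly.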

i also added a reka ai chatbot that answers questions about the current step. like if you're confused about which screw to use or how a part should be oriented, you can just ask and it'll help you out. it has context about the entire assembly process and the specific step you're on, so the answers are actually relevant.
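one way to give the chatbot that context is to rebuild the prompt on every question: the whole step outline, the current step's parts and tools, and a few recent turns. this is a hedged sketch. the role/content message shape is a common chat-api convention assumed here, not reka's documented request format, and `buildChatMessages` plus the six-turn history window are hypothetical choices.

```typescript
// Hypothetical step shape, mirroring what the manual parser produces.
interface AssemblyStep {
  stepNumber: number;
  description: string;
  partIds: string[];
  tools: string[];
}

// Assumed generic chat message shape (not a specific vendor's api).
interface ChatMessage {
  role: "system" | "user" | "assistant";
  content: string;
}

const HISTORY_TURNS = 6; // hypothetical: how many recent turns to resend

// Build the messages for one question: full outline + current step + recent history.
function buildChatMessages(
  productName: string,
  steps: AssemblyStep[],
  currentIndex: number,
  history: ChatMessage[],
  question: string,
): ChatMessage[] {
  if (currentIndex < 0 || currentIndex >= steps.length) {
    throw new RangeError("current step out of range");
  }
  const outline = steps.map((s) => `Step ${s.stepNumber}: ${s.description}`).join("\n");
  const cur = steps[currentIndex];
  const system = [
    `You help users assemble the ${productName}.`,
    "Full assembly outline:",
    outline,
    "",
    `The user is on step ${cur.stepNumber}: ${cur.description}`,
    `Parts in this step: ${cur.partIds.join(", ") || "none"}`,
    `Tools in this step: ${cur.tools.join(", ") || "none"}`,
    "Answer only about this assembly; if unsure, say so.",
  ].join("\n");
  // only the most recent turns are resent so the prompt stays small
  return [
    { role: "system", content: system },
    ...history.slice(-HISTORY_TURNS),
    { role: "user", content: question },
  ];
}
```

rebuilding the system message each time means switching steps in the viewer automatically changes what the bot "knows" is current, with no extra state to sync.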

performance was a real challenge. rendering 50+ parts in 3d got laggy fast, so i spent time optimizing with low-poly primitives, frustum culling, and lazy loading. i also had to deal with coordinate system conversions, since pdf coordinates don't map directly to three.js 3d space (y-up vs z-up), which was annoying to figure out.
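for the y-up vs z-up part, the core conversion is a -90° rotation about the x axis. this sketch assumes the source positions are right-handed and z-up (x right, y forward, z up), while three.js is right-handed and y-up. the function names are hypothetical.

```typescript
type Vec3 = [number, number, number];

// Right-handed z-up (x right, y forward, z up) -> three.js right-handed y-up
// (x right, y up, z toward the viewer). A -90° rotation about x:
// (x, y, z) -> (x, z, -y), so "forward" ends up pointing down -z.
function zUpToYUp([x, y, z]: Vec3): Vec3 {
  return [x, z, -y];
}

// Inverse (+90° about x), for writing positions back into the source frame.
function yUpToZUp([x, y, z]: Vec3): Vec3 {
  return [x, -z, y];
}

// Box sizes are unsigned extents, so they only swap axes (no sign flip):
// (width, depth, height) -> (width, height, depth).
function sizeZUpToYUp([w, d, h]: Vec3): Vec3 {
  return [w, h, d];
}
```

an alternative is to leave the data in z-up and wrap the whole assembly in a single `<group rotation={[-Math.PI / 2, 0, 0]}>`, which applies the same rotation through the scene graph. converting the data up front instead keeps positions, camera targets, and anything passed to the chatbot in one consistent frame.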

rate limits were another pain point. gemini has a 60 requests/minute limit and bright data charges per request, so i implemented 500ms delays between api calls and md5-based caching to avoid reprocessing the same files. definitely learned a lot about managing api costs and optimizing scraping workflows.
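a sketch of the throttling + caching combo, assuming node (it uses `createHash` from `node:crypto`). `ThrottledCachedRunner` is a hypothetical name: it keys results by the md5 of the input bytes, runs uncached calls one at a time, and spaces call starts at least `minGapMs` apart. worth noting: a 500 ms gap on its own allows up to 120 call starts per minute, so staying under 60 requests/minute also depends on each request taking a while; a strict ceiling would need `minGapMs` of 1000.

```typescript
import { createHash } from "node:crypto";

// Resolve after `ms` milliseconds.
const sleep = (ms: number): Promise<void> =>
  new Promise<void>((resolve) => setTimeout(resolve, ms));

// md5 is used purely as a cache key for file contents, not for security.
function md5(data: Buffer | string): string {
  return createHash("md5").update(data).digest("hex");
}

// Hypothetical helper: serializes uncached calls, spaces their start times at
// least `minGapMs` apart, and memoizes results by the md5 of the input.
class ThrottledCachedRunner<T> {
  private readonly cache = new Map<string, T>();
  private readonly minGapMs: number;
  private lastStart = 0;
  private chain: Promise<unknown> = Promise.resolve();

  constructor(minGapMs = 500) {
    this.minGapMs = minGapMs;
  }

  run(input: Buffer | string, call: (input: Buffer | string) => Promise<T>): Promise<T> {
    const key = md5(input);
    const hit = this.cache.get(key);
    if (hit !== undefined) return Promise.resolve(hit);

    const result = this.chain.then(async (): Promise<T> => {
      // a duplicate request may have been queued before the first one finished
      const again = this.cache.get(key);
      if (again !== undefined) return again;
      const wait = this.lastStart + this.minGapMs - Date.now();
      if (wait > 0) await sleep(wait);
      this.lastStart = Date.now();
      const value = await call(input);
      this.cache.set(key, value);
      return value;
    });
    // keep the queue moving even if this call fails; the caller still sees the error
    this.chain = result.catch(() => undefined);
    return result;
  }
}
```

since md5 is only a cache key here, its known collision weaknesses don't really matter; what matters is that identical pdf bytes always map to the same key, so a re-uploaded manual never costs another api call.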

the final product looks pretty polished, with smooth animations and a responsive design, and it actually works well across devices. built the whole stack with typescript, next.js, and tailwind for the frontend, express and node for the backend, and integrated gemini and reka for the ai stuff. won some recognition at cal hacks, which was cool. honestly, i'm just proud we built something that actually solves a real problem instead of yet another generic chatbot.